Introduction

The transport layer in both the Internet and OSI models is responsible for end-to-end communication. It provides the layers above it with a service that moves data from the sender to the receiver without regard to the intervening network, which is handled by the network layer. So the transport layer views the problem as one of providing point-to-point data transmission between two entities. Unlike the network layer, with all of its switching and routing needs, the transport layer is relatively simple. You might think of the transport layer as the layer that separates applications on a host from the actual network. With the transport layer handling the communication, your applications can communicate with another host across the network as easily as if they were local.

The important issues in the transport layer are:

- The service model

- The multiplexing model

The Service Model

The service model is the type of service provided to the upper layers. While this could be a variety of things, there are two that we will study: reliable stream service and unreliable datagram service. Reliable means that the transport layer guarantees that data arrives in order, with no losses, duplicates, or data errors. A stream service views the data as a series of bytes rather than as a collection of messages, so the upper layers don't have to worry about creating specific message lengths or message boundaries. Unreliable means that not all of the possible error conditions are prevented, and possibly none are. A datagram service means that data is seen as a series of variable-length messages (not a stream of bytes).

The Multiplexing Model

All the layers in the network protocol stack have concerns with multiplexing, but it is especially important at the transport layer. This is primarily because the transport layer is the interface between the many networking applications running on a host and all of the processes they are communicating with elsewhere on the network. For example, on a large server you might find all of the following in progress simultaneously:

- 20 people running web browsers connected to 20 different web sites

- 25 remote browsers connected to your local web server

- the mail server sending or receiving mail with 10 other sites

- 10 telnet sessions and 8 ftp sessions

All of these go through the transport layer, and it has to ensure that every message is delivered to the right process on the right host. The transport layer has to multiplex all of these concurrent demands over the one connection (or small number of connections) to the network.

In the Internet world, this is handled by ports, which are simply integers representing a location in a map. This map tells the transport layer what to do with communications that are being sent through a port in either direction. Any process that wants to communicate with processes on other nodes finds an available unused port and tells the transport layer (through the operating system) where to send outgoing or incoming data. In the Internet model, ports are 16-bit integers from 0 to 65,535.
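To make the port map concrete, here is a minimal Python sketch of a per-host table that demultiplexes incoming data by protocol and destination port. The class and method names (`PortTable`, `bind`, `deliver`, `receive`) are hypothetical illustrations, not an operating system API. A real stack also looks at the source address and port for connected TCP sockets.

```python
from __future__ import annotations

from collections import deque

MIN_PORT, MAX_PORT = 0, 65_535  # ports are 16-bit unsigned integers


class PortTable:
    """Hypothetical port map: (protocol, port) -> queue of data for one process."""

    def __init__(self) -> None:
        self._map: dict[tuple[str, int], deque[bytes]] = {}

    def bind(self, protocol: str, port: int) -> None:
        """Claim an unused port for a process."""
        if not MIN_PORT <= port <= MAX_PORT:
            raise ValueError(f"port {port} is outside 0..65535")
        key = (protocol, port)
        if key in self._map:
            raise OSError(f"{protocol} port {port} is already in use")
        self._map[key] = deque()

    def deliver(self, protocol: str, dst_port: int, data: bytes) -> bool:
        """Hand incoming data to whichever process owns the destination port."""
        queue = self._map.get((protocol, dst_port))
        if queue is None:
            return False  # nobody has this port open: the data is discarded
        queue.append(data)
        return True

    def receive(self, protocol: str, port: int) -> bytes | None:
        """Next queued message for the process bound to this port, if any."""
        queue = self._map[(protocol, port)]
        return queue.popleft() if queue else None


table = PortTable()
table.bind("udp", 53)
table.bind("tcp", 53)  # TCP and UDP have separate port spaces
table.deliver("udp", 53, b"query")
print(table.receive("udp", 53))  # prints b'query'
```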

Unreliable Datagram Service

The unreliable datagram service in the Internet protocol stack is called the User Datagram Protocol, or UDP. This is an unreliable, connectionless protocol that simply hands the datagram to the network layer and forgets it. ICMP can report some errors, for example an unreachable destination port, but UDP itself is under no obligation to provide much of anything.

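The UDP packet format is an 8-byte header followed by the data. The header fields are:

- Source Port (16 bits)

- Destination Port (16 bits)

- Length (16 bits)

- Checksum (16 bits)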

Notice that UDP has to handle the multiplexing problem: which process on this host should get the incoming message? The answer is whichever process currently has the destination port open for UDP data services.

The Checksum uses the same one's-complement algorithm as IP. However, it covers more than the UDP header and data: it also includes a pseudo-header borrowed from the IP layer, made up of the source and destination IP addresses, the protocol number, and the UDP length. This is a little strange and seems contrary to our protocol stack philosophy that different layers are independent. Here, the designers of UDP wanted to be able to double-check that the message was delivered to the correct host.

Finally, the Length is the number of bytes in the datagram, including the 8-byte header.
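The header layout and the pseudo-header checksum can be sketched with Python's standard `struct` and `socket` modules. This is a minimal, IPv4-only illustration. The helper names `internet_checksum`, `pseudo_header`, `build_udp`, and `verify_udp` are hypothetical; a real stack does this work inside the operating system.

```python
import socket
import struct

UDP_PROTOCOL = 17  # IP protocol number for UDP


def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, then complemented (as in IP)."""
    if len(data) % 2:
        data += b"\x00"  # pad an odd-length buffer with one zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return ~total & 0xFFFF


def pseudo_header(src_ip: str, dst_ip: str, udp_length: int) -> bytes:
    """Fields borrowed from the IP layer: addresses, protocol, UDP length."""
    return (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
            + struct.pack("!BBH", 0, UDP_PROTOCOL, udp_length))


def build_udp(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              payload: bytes) -> bytes:
    length = 8 + len(payload)  # Length counts the 8-byte header as well
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)
    checksum = internet_checksum(
        pseudo_header(src_ip, dst_ip, length) + header + payload)
    checksum = checksum or 0xFFFF  # an all-zero field means "no checksum" (IPv4)
    return struct.pack("!HHHH", src_port, dst_port, length, checksum) + payload


def verify_udp(src_ip: str, dst_ip: str, datagram: bytes) -> bool:
    """A correct datagram, checksum included, sums to all ones (~sum == 0)."""
    pseudo = pseudo_header(src_ip, dst_ip, len(datagram))
    return internet_checksum(pseudo + datagram) == 0


d = build_udp("10.0.0.1", "10.0.0.2", 5000, 53, b"hello")
print(verify_udp("10.0.0.1", "10.0.0.2", d))  # prints True
print(verify_udp("10.0.0.1", "10.0.0.9", d))  # prints False: wrong host
```

The second check shows why the pseudo-header is there. A datagram that arrives at the wrong host fails verification, even though nothing in the UDP header itself names the host.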

Reliable Stream Service

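Reliable stream service in the Internet protocol stack is provided by the Transmission Control Protocol, or TCP. The TCP header consists of the following fields, followed by the data:

- Source Port (16 bits) and Destination Port (16 bits)

- Sequence Number (32 bits)

- Acknowledgement Number (32 bits)

- Header Length (4 bits), reserved bits, and the flags

- Advertised Window Size (16 bits)

- Checksum (16 bits) and Urgent Pointer (16 bits)

- Options (variable length)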

As with UDP, TCP has to handle the multiplexing problem, and it uses the port concept as well. The next two fields are Sequence Number and Acknowledgement Number, which might make you suspect that TCP is a sliding window protocol; it is. TCP has options, so the Header Length (counted in 32-bit words) is needed to know where the data starts. The Advertised Window Size is used to control the sliding window protocol. The Checksum works exactly as in UDP. The Urgent Pointer is valid only when the URG flag is set, and it indicates where the urgent data is located in the segment.
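Splitting a raw segment into these fields can be sketched with `struct`. The fixed part of the header is 20 bytes. The top four bits of the 16-bit word after the Acknowledgement Number give the Header Length in 32-bit words, and anything between byte 20 and that length is options. `TCPHeader` and `parse_tcp` are hypothetical names used only for this illustration.

```python
from __future__ import annotations

import struct
from typing import NamedTuple


class TCPHeader(NamedTuple):
    src_port: int
    dst_port: int
    seq: int
    ack: int
    header_len: int  # in bytes (the header field itself counts 32-bit words)
    flags: int       # low six bits: URG, ACK, PSH, RST, SYN, FIN
    window: int      # Advertised Window Size
    checksum: int
    urgent_ptr: int
    options: bytes


def parse_tcp(segment: bytes) -> tuple[TCPHeader, bytes]:
    """Split a raw TCP segment into its header fields and its data."""
    if len(segment) < 20:
        raise ValueError("shorter than the 20-byte fixed header")
    src, dst, seq, ack, off_flags, window, checksum, urg = struct.unpack(
        "!HHIIHHHH", segment[:20])
    header_len = (off_flags >> 12) * 4  # top 4 bits = Header Length in words
    if not 20 <= header_len <= len(segment):
        raise ValueError(f"invalid header length {header_len}")
    header = TCPHeader(src, dst, seq, ack, header_len, off_flags & 0x3F,
                       window, checksum, urg, segment[20:header_len])
    return header, segment[header_len:]


# A SYN with Header Length 6 (24 bytes): one 4-byte option (MSS = 1460)
raw = (struct.pack("!HHIIHHHH", 40000, 80, 1000, 0, (6 << 12) | 0x02,
                   65535, 0, 0)
       + bytes([2, 4, 0x05, 0xB4]))
hdr, data = parse_tcp(raw)
print(hdr.header_len, hdr.options, data)
```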

The TCP flags are:

- SYN - sync or open indicator

- FIN - close indicator

- RESET - reset (abort) the connection; a new connection must then be opened with a fresh three-way handshake

- PUSH - the sending application asked for this data to be sent now, so the receiver should pass it up promptly

- URG - The urgent pointer is valid

- ACK - The Acknowledgement field is valid

The SYN and FIN flags are used to open and close a connection, respectively. RESET can be used to abort a connection if one end finds that it is confused by the current state. The PUSH flag indicates that the segment is being sent at the request of the controlling upper layer; it will be discussed later. The URG flag indicates that the segment contains data that requires immediate attention; this will also be discussed later. Finally, the ACK flag indicates that the value in the Acknowledgement Number field is meaningful.
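In the header, these six flags occupy the low-order bits of the byte just before the Advertised Window Size. A small decoding sketch follows. The bit values are the ones used on the wire, from FIN = 0x01 up to URG = 0x20. `describe_flags` and `ack_number` are hypothetical helpers, and newer flag bits defined above URG are ignored here.

```python
from __future__ import annotations

FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20

# Names as used in the text (RESET, PUSH), keyed by wire bit value
FLAG_NAMES = {FIN: "FIN", SYN: "SYN", RST: "RESET", PSH: "PUSH",
              ACK: "ACK", URG: "URG"}


def describe_flags(flags: int) -> list[str]:
    """List the names of the flags that are set, lowest bit first."""
    return [name for bit, name in FLAG_NAMES.items() if flags & bit]


def ack_number(flags: int, ack_field: int) -> int | None:
    """The Acknowledgement Number is meaningful only when ACK is set."""
    return ack_field if flags & ACK else None


print(describe_flags(SYN | ACK))  # prints ['SYN', 'ACK']
```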

So there are a few things that need to be explained, but first, let's see how TCP implements a sliding window protocol.

TCP Sliding Window Management

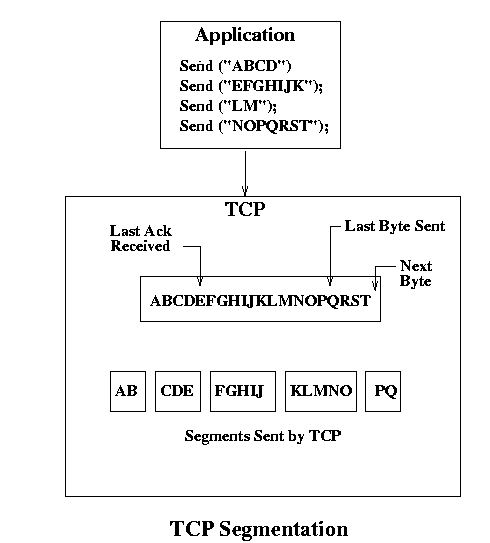

TCP, being a stream protocol, does not recognize message boundaries and simply treats all incoming data as continuous stream of bytes. In the example below, the application sends 4 messages, which TCP buffers and then sends to the other end as TCP segments. This is the term used to identify a block of data sent by TCP, that has nothing to do with how they may have originally been send by the application.The application sends its messages, which are then buffered by TCP as a continuous stream of bytes. TCP then decides according to its sliding window policies to send data with certain segment sizes. In this case, it sent a two-byte segment, then a three-byte, then two five-byte segments and finally a two-byte segment. It has gotten acknowledgements for the first

two, and it has three bytes in the buffer that haven't been sent. While this may seem confusing, it is a very efficient implementation of a sliding window protocol and allows TCP to have very good control over the data it is managing. It only requires one buffer, which defines the maximum window size, but the window size itself can be adjusted dynamically via the Advertised Window Size field.
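A minimal Python sketch of the idea that write boundaries disappear inside TCP. The four messages are made-up contents, not the data in the figure. They were chosen so that the segment sizes from the text (2, 3, 5, 5, 2) leave three bytes unsent.

```python
from __future__ import annotations

# Illustrative contents: four application writes of 4, 6, 3 and 7 bytes
messages = [b"ABCD", b"EFGHIJ", b"KLM", b"NOPQRST"]
stream = b"".join(messages)  # TCP keeps only the bytes; write boundaries are lost


def cut_segments(data: bytes, sizes: list[int]) -> list[bytes]:
    """Slice the byte stream into consecutive segments of the given sizes."""
    segments, pos = [], 0
    for size in sizes:
        segments.append(data[pos:pos + size])
        pos += size
    return segments


segments = cut_segments(stream, [2, 3, 5, 5, 2])
sent = sum(len(s) for s in segments)
unsent = stream[sent:]
print(segments, unsent)
```

Segment boundaries (for example `b"KLMNO"`) cut straight across the application's message boundaries (`b"KLM"` and `b"NOPQRST"`).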

On the receiving end, Next Byte Expected and the Advertised Window Size specify how much data can be received and stored while waiting for Next Byte Expected to advance. Next Byte Expected advances only when a segment arrives that contains the next byte expected. TCP acknowledges data as it is received, not after it is passed up to the higher layer.

So how does TCP decide what to send? It depends on the window size, on the amount of unacknowledged data that has been sent, and on a couple of other things:

- Full segment: the Maximum Segment Size (MSS) limits the amount of data that TCP can send in a single segment. It is set when the connection is established and can be up to 65,535 bytes, but the default is 536 bytes. If MSS bytes are in the buffer and the receiver has a large enough window, TCP sends a segment.

- Inactivity timer: TCP doesn't like its connections to be idle for long. If no data is sent for the period defined by the inactivity timer, it sends any data in the buffer, or an empty packet if there is nothing available.

- Acknowledgement received: if TCP receives a packet with an acknowledgement from the other end, it sends any data available in the buffer.

- Push: if the application requests a push (the PUSH flag), TCP sends everything in the buffer, possibly as more than one segment.
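The sending decision described above can be condensed into one function. This is a sketch under stated assumptions:

- `next_segment_size` and its parameters are hypothetical.
- The usable window is taken to be the advertised window minus data already in flight.
- The empty packet sent on an idle timer is not modeled.
- The function returns one segment at a time, so a push that flushes a large buffer would call it repeatedly.

```python
DEFAULT_MSS = 536  # default when no MSS option is exchanged


def next_segment_size(buffered_unsent: int, usable_window: int,
                      mss: int = DEFAULT_MSS, push: bool = False,
                      timer_expired: bool = False,
                      ack_arrived: bool = False) -> int:
    """How many bytes to put in the next segment (0 means keep waiting)."""
    can_send = min(buffered_unsent, usable_window, mss)
    if can_send <= 0:
        return 0  # nothing buffered, or the receiver's window is full
    if buffered_unsent >= mss and usable_window >= mss:
        return mss  # a full-sized segment is ready and fits in the window
    if push or timer_expired or ack_arrived:
        return can_send  # send what we have, bounded by MSS and window
    return 0  # otherwise let more data accumulate


print(next_segment_size(1000, 4000))             # full segment: 536
print(next_segment_size(100, 4000))              # too little data: 0
print(next_segment_size(100, 4000, push=True))   # pushed: 100
```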

The algorithm for the sending side is:

- If the message does not fit in the buffer (Next Byte + Message Length > Last Ack Received + 1 + Buffer Size), block

- Otherwise, put message in buffer and Next Byte = Next Byte + Message Length

- If (Next Byte - Last Byte Sent + 1) > MSS, send a segment

- If Push Operation specified, send a segment

- If the inactivity timer expires, send a segment

- Last Byte Sent = Last Byte Sent + Segment Size

- If an Ack is received, set Last Ack Received = Ack Number - 1, and send a segment if data is pending.
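The sender rules can be sketched as a small Python class. This is a simplified model, not real TCP:

- Bytes are numbered from 0.
- The whole stream is kept in memory.
- `SendBuffer`, `write`, `send_segment`, and `on_ack` are hypothetical names.
- `last_acked` plays the role of Last Ack Received, and `last_sent` the role of Last Byte Sent.

```python
from __future__ import annotations


class SendBuffer:
    """Sketch of the sender's bookkeeping, counting bytes from 0
    (real TCP counts from a random initial sequence number).

    Invariant: last_acked <= last_sent <= last_written, and the bytes
    written but not yet acknowledged never exceed buffer_size.
    """

    def __init__(self, buffer_size: int, mss: int) -> None:
        self.buffer_size = buffer_size
        self.mss = mss
        self.stream = bytearray()  # everything written (real TCP drops acked bytes)
        self.last_acked = -1       # Last Ack Received: highest byte acknowledged
        self.last_sent = -1        # Last Byte Sent
        self.last_written = -1     # last byte the application has written

    def write(self, message: bytes) -> bool:
        """Buffer a message; False means the application would have to block."""
        if self.last_written + len(message) - self.last_acked > self.buffer_size:
            return False
        self.stream += message
        self.last_written += len(message)
        return True

    def send_segment(self, advertised_window: int) -> bytes:
        """Take the next segment: at most MSS bytes, within the receiver's window."""
        unsent = self.last_written - self.last_sent
        in_flight = self.last_sent - self.last_acked
        size = min(unsent, self.mss, advertised_window - in_flight)
        if size <= 0:
            return b""
        start = self.last_sent + 1
        self.last_sent += size
        return bytes(self.stream[start:start + size])

    def on_ack(self, ack_number: int) -> None:
        """An Ack names the next byte expected, so everything before it is acked."""
        self.last_acked = max(self.last_acked, ack_number - 1)


sb = SendBuffer(buffer_size=10, mss=4)
sb.write(b"ABCDEF")
print(sb.write(b"GHIJK"))     # False: 11 unacknowledged bytes would not fit
print(sb.send_segment(10))    # b'ABCD'
print(sb.send_segment(10))    # b'EF'
sb.on_ack(4)                  # bytes 0..3 acknowledged, freeing buffer space
print(sb.write(b"GHIJK"))     # True
```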

Similarly, the receiver algorithm is:

- If Sequence Number + Segment Length > Last Ack Sent + Advertised Window Size, the segment does not fit in the window: drop the packet and send an Ack (Next Byte Expected).

- Otherwise, put the data in the buffer at the location indicated by Sequence Number. If the segment covers Next Byte Expected, update Next Byte Expected to Sequence Number + Segment Length, plus any contiguous data already received.

- If Next Byte Expected > Last Ack Sent, send the data up when requested, ACK and move Last Ack Sent.
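The receiver side can be sketched the same way. This model rests on several assumptions:

- Bytes are numbered from 0.
- Each byte is stored in a dictionary under its sequence position.
- The window check uses the right edge of the buffer (bytes already read by the application plus the buffer size).
- An Ack is returned for every segment.
- The class and method names are hypothetical.

```python
from __future__ import annotations


class ReceiveBuffer:
    """Sketch of receiver bookkeeping, counting bytes from 0.

    Segments are stored at the position given by their sequence number, so
    out-of-order and overlapping (retransmitted) data are handled uniformly.
    """

    def __init__(self, buffer_size: int) -> None:
        self.buffer_size = buffer_size
        self.next_expected = 0  # Next Byte Expected
        self.delivered = 0      # bytes already read by the application
        self.pending: dict[int, int] = {}  # position -> byte, possibly with gaps

    def on_segment(self, seq: int, data: bytes) -> int:
        """Store a segment and return the ack number to send back."""
        if seq + len(data) > self.delivered + self.buffer_size:
            return self.next_expected  # does not fit: drop it, re-ack
        for offset, byte in enumerate(data):
            if seq + offset >= self.next_expected:  # skip already-acked bytes
                self.pending[seq + offset] = byte
        while self.next_expected in self.pending:  # advance over contiguous data
            self.next_expected += 1
        return self.next_expected

    def read(self) -> bytes:
        """Pass all in-order data up to the application, freeing buffer space."""
        data = bytes(self.pending.pop(i)
                     for i in range(self.delivered, self.next_expected))
        self.delivered = self.next_expected
        return data


rb = ReceiveBuffer(buffer_size=20)
print(rb.on_segment(0, b"AB"))      # 2
print(rb.on_segment(5, b"FGHIJ"))   # still 2: bytes 2..4 are missing
print(rb.on_segment(2, b"CDEFG"))   # 10: the gap is filled
print(rb.read())                    # b'ABCDEFGHIJ'
```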

An interesting thing can happen with the TCP sliding window implementation. In the previous example, suppose that the segment containing "CDE" is lost. Eventually the timer for that segment expires and the data is resent. However, TCP doesn't keep a record of segment boundaries, so what gets sent might be "CDEFG". At the receiving end this doesn't matter: the receiver stores the data and advances Next Byte Expected, regardless of the fact that some of the bytes are duplicates.
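The retransmission can be sketched as a function that works only with byte positions. Two assumptions here are not from the text: the resent segment starts at the first unacknowledged byte, and it is bounded by MSS and by what was already sent. The name `retransmit` is hypothetical. With an MSS of 5, the lost "CDE" comes back as "CDEFG".

```python
def retransmit(stream: bytes, last_acked: int, last_sent: int, mss: int) -> bytes:
    """On a timeout, resend from the first unacknowledged byte.

    Only byte positions are used; the original segment boundaries are not
    remembered, so the resent segment may merge data from several segments.
    """
    start = last_acked + 1
    end = min(start + mss, last_sent + 1)  # never beyond what was already sent
    return stream[start:end]


stream = b"ABCDEFGHIJKLMNOPQRST"
# "AB" acknowledged (bytes 0-1), bytes through "Q" (16) sent, "CDE" was lost
print(retransmit(stream, last_acked=1, last_sent=16, mss=5))  # prints b'CDEFG'
```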

Sequence Numbers

TCP uses 32-bit sequence numbers so that wraparound, and with it the delayed packet problem, is unlikely. When systems and networks were slower, it seemed that wraparound would never happen. But if a system can send 100,000,000 bytes per second, the sequence counter wraps around in 2^32 / 10^8, or about 42.9 seconds. Is it possible for a delayed packet to hang around in the network that long? Probably, so as network speeds increase, the limited sequence number size becomes a problem.

Starting Sequence Numbers

Due to the potential for delayed packet problems, TCP connections don't start the sequence numbers at zero. Instead, they generate a random number to use, and both sides generate their own.
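The wraparound arithmetic from the previous section and the random starting point can be sketched together. Drawing a uniformly random 32-bit value with `secrets.randbits` is a simplification. Real stacks typically derive the initial sequence number from a clock plus a keyed hash of the connection's addresses and ports (RFC 6528). The function names are hypothetical.

```python
import secrets

SEQ_SPACE = 2 ** 32  # number of distinct 32-bit sequence numbers


def wrap_time(bytes_per_second: float) -> float:
    """Seconds until the byte-counting sequence number space is used up."""
    return SEQ_SPACE / bytes_per_second


def initial_sequence_number() -> int:
    """A uniformly random 32-bit starting point (a simplification)."""
    return secrets.randbits(32)


def advance(seq: int, nbytes: int) -> int:
    """Sequence numbers wrap around modulo 2**32."""
    return (seq + nbytes) % SEQ_SPACE


print(round(wrap_time(100_000_000), 1))  # prints 42.9
client_isn = initial_sequence_number()   # each side picks its own
server_isn = initial_sequence_number()
```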

TCP Options

TCP has a very limited set of options. They are:

- kind = 0, end of options

- kind = 1, no op

- kind = 2, len = 4, maximum segment size in bytes; sent only in a SYN segment when the connection is opened, it announces the largest segment this end is willing to receive

- kind = 3, len = 3, window scale factor, changes the scale factor of the Advertised Window Size from 1 byte to 2^N bytes. For example, if this value is 2 and the AWS is 60,000, then the AWS is actually 60,000 x 2^2 = 60,000 x 4 = 240,000 bytes.

- kind = 8, len = 10, timestamp
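Options are encoded as kind/length/value entries, except for kinds 0 and 1, which are a single byte. A minimal parsing sketch covering only the kinds listed above follows. `parse_tcp_options` and `effective_window` are hypothetical names, and unrecognized options are kept as raw bytes.

```python
from __future__ import annotations

import struct


def parse_tcp_options(options: bytes) -> dict[str, object]:
    """Decode the option bytes that sit between byte 20 and Header Length."""
    result: dict[str, object] = {}
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == 0:  # end of option list
            break
        if kind == 1:  # no-op, used for padding/alignment
            i += 1
            continue
        if i + 1 >= len(options):
            raise ValueError("truncated option")
        length = options[i + 1]
        if length < 2 or i + length > len(options):
            raise ValueError(f"bad length for option kind {kind}")
        body = options[i + 2:i + length]
        if kind == 2 and length == 4:
            result["mss"] = struct.unpack("!H", body)[0]
        elif kind == 3 and length == 3:
            result["window_scale"] = body[0]
        elif kind == 8 and length == 10:
            result["timestamp"] = struct.unpack("!II", body)
        else:
            result[f"kind_{kind}"] = body  # unknown option: keep raw bytes
        i += length
    return result


def effective_window(advertised: int, scale: int) -> int:
    """Advertised Window Size * 2**scale."""
    return advertised << scale


opts = bytes([2, 4, 0x05, 0xB4, 1, 3, 3, 2, 0])  # MSS 1460, NOP, scale 2, END
print(parse_tcp_options(opts))     # {'mss': 1460, 'window_scale': 2}
print(effective_window(60_000, 2))  # 240000, as in the example above
```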

Urgent Data

The term urgent data is somewhat of a misnomer, but it indicates that the sender has something that needs to be processed immediately. For example, suppose that you have an application that performs database operations based on commands from a user. The user is entering commands and typing ahead when he/she notices that a previous command is wrong and should be stopped, and types a control-C to stop it. But TCP transmits this character like any other, so it will be buffered at the other end and processed when the receiving end gets around to it. If the control-C were sent with the URG flag set, the application at the other end would receive a signal that urgent data was present when it arrived. The application could then read that data out of order and stop the offending process. The Urgent Pointer in the TCP header simply points to the location of the urgent data in the segment.

Three-way Handshake

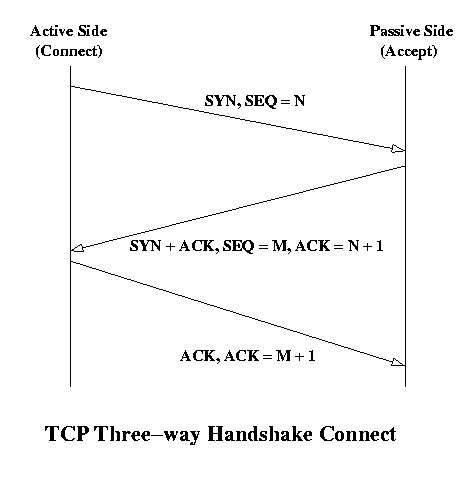

The three-way handshake is the way in which TCP insures that both ends are in agreement on the sequence numbers to use before actually sending data. Diagrammatically, the three-way handshake functions like this:The objective of the three way handshake is to insure that you don't inadvertently create a connection that should not exist. Remember that delayed packets can show up and inopportune times, so this procedure tries to make the likelihood that a delayed "connect" packet doesn't create a connection and also that the two endpoints are synchronized with regard to their starting sequence numbers.
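The exchange can be sketched by computing the sequence and acknowledgement numbers of the three segments. A SYN consumes one sequence number, so each side acknowledges the other's initial sequence number plus one. The `client_accepts` check shows how a reply to a delayed SYN from an old attempt is recognized, because it acknowledges the wrong number; in real TCP the client would answer such a reply with a RESET. The dataclass and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

SEQ_SPACE = 2 ** 32


@dataclass(frozen=True)
class Segment:
    flags: frozenset[str]
    seq: int
    ack: int | None = None  # meaningful only when "ACK" is in flags


def three_way_handshake(client_isn: int, server_isn: int) -> list[Segment]:
    """The three segments exchanged when a client opens a connection."""
    syn = Segment(frozenset({"SYN"}), seq=client_isn)
    # The server acknowledges client ISN + 1 and announces its own ISN
    syn_ack = Segment(frozenset({"SYN", "ACK"}), seq=server_isn,
                      ack=(client_isn + 1) % SEQ_SPACE)
    # The client confirms the server's ISN; both sides are now synchronized
    ack = Segment(frozenset({"ACK"}), seq=(client_isn + 1) % SEQ_SPACE,
                  ack=(server_isn + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


def client_accepts(reply: Segment, client_isn: int) -> bool:
    """Accept a SYN+ACK only if it acknowledges our own ISN."""
    return ({"SYN", "ACK"} <= reply.flags
            and reply.ack == (client_isn + 1) % SEQ_SPACE)


for seg in three_way_handshake(client_isn=1000, server_isn=5000):
    print(sorted(seg.flags), seg.seq, seg.ack)
```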

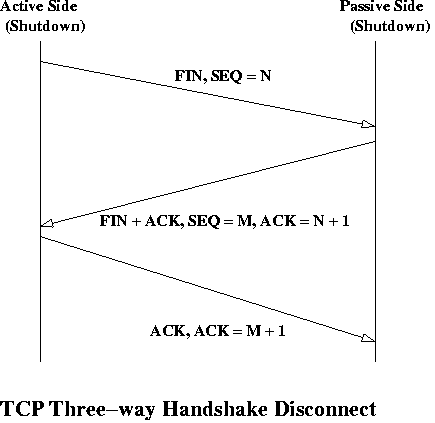

There is also a three-way handshake for termination:

The purpose of this procedure is to ensure that both endpoints have the opportunity to deliver any data in their buffers and to receive any data that might be in transit. Since each end can hold off sending its own FIN for as long as it needs to finish sending, this guarantees an orderly shutdown of the connection. For those of you who write socket-layer stream programs, this is why the shutdown call should be used with an appropriate parameter specifying the intentions of the active side.
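The shutdown advice can be illustrated with Python's standard `socket` module. In this sketch the client is the active side:

- It sends a request and calls `shutdown(socket.SHUT_WR)`, which sends its FIN but leaves the receive direction open.
- The server reads until it sees end-of-file, replies on its still-open direction, and then closes.

The helper names `serve_one` and `demo` and the localhost setup are assumptions made for the demonstration.

```python
from __future__ import annotations

import socket
import threading


def serve_one(listener: socket.socket, received: list[bytes]) -> None:
    """Read until the client's FIN, reply, then close (sending our own FIN)."""
    conn, _addr = listener.accept()
    with conn:
        chunks = []
        while True:
            data = conn.recv(1024)
            if not data:  # b"" means the peer shut down its sending side
                break
            chunks.append(data)
        request = b"".join(chunks)
        received.append(request)
        # Our direction is still open, so we can still answer
        conn.sendall(b"got %d bytes" % len(request))
    # leaving the with-block closes conn, which sends the server's FIN


def demo() -> bytes:
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
        listener.listen(1)
        received: list[bytes] = []
        server = threading.Thread(target=serve_one, args=(listener, received))
        server.start()
        with socket.create_connection(listener.getsockname(), timeout=5) as client:
            client.sendall(b"hello, server")
            client.shutdown(socket.SHUT_WR)  # active side: "no more data from me"
            reply = b""
            while chunk := client.recv(1024):  # keep reading until server's FIN
                reply += chunk
        server.join()
    return reply


print(demo())  # prints b'got 13 bytes'
```

Calling `close()` instead of `shutdown(SHUT_WR)` would give up the receive direction too, and the client could then never read the server's reply.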

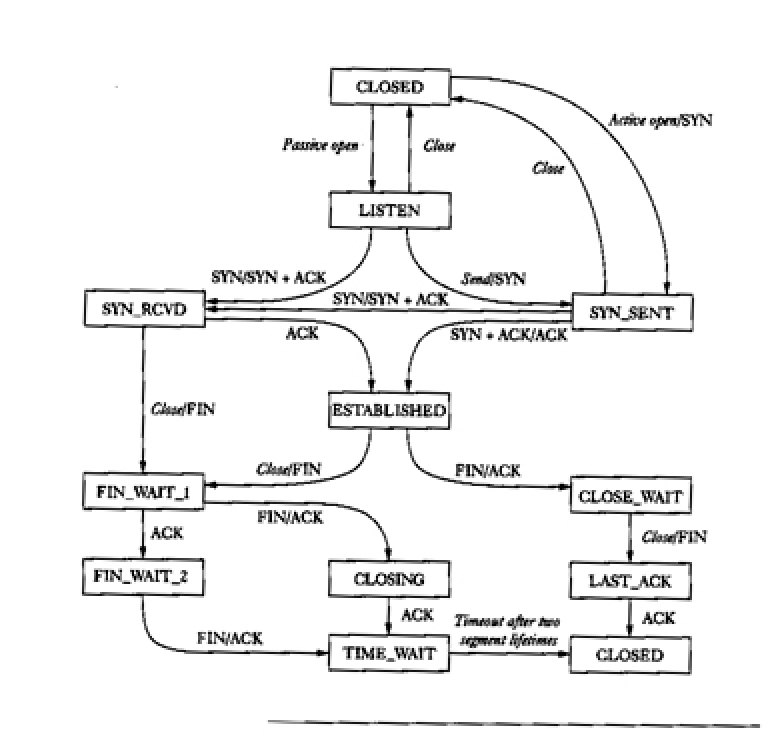

The State-Transition Diagram

One way to describe a protocol is with a state transition diagram, which is basically the same thing as a finite state automata, although liberties are sometimes taken. For TCP, the following is a state-transition diagram that shows the properties of the protocol.Most of this diagram is concerned with connecting and disconnecting, and the entire sliding window management process is encapsulated in the Established state. It would be possible to produce a state-transision diagram for this part of the process also. Compare the three-way handshake and three-way shutdown processes to the diagram and identify the critical parts.
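A state-transition diagram maps naturally onto a lookup table keyed by (state, event). The sketch below covers the connection-setup and teardown transitions of the standard TCP diagram. The table is a subset of the full diagram, the event labels are informal, and `run` is a hypothetical helper that replays a sequence of events.

```python
from __future__ import annotations

# (state, event) -> next state; a subset of the standard TCP diagram
TRANSITIONS = {
    ("CLOSED", "passive open"): "LISTEN",
    ("CLOSED", "active open / send SYN"): "SYN_SENT",
    ("LISTEN", "recv SYN / send SYN+ACK"): "SYN_RCVD",
    ("SYN_SENT", "recv SYN+ACK / send ACK"): "ESTABLISHED",
    ("SYN_SENT", "recv SYN / send SYN+ACK"): "SYN_RCVD",  # simultaneous open
    ("SYN_RCVD", "recv ACK"): "ESTABLISHED",
    ("ESTABLISHED", "close / send FIN"): "FIN_WAIT_1",
    ("ESTABLISHED", "recv FIN / send ACK"): "CLOSE_WAIT",
    ("FIN_WAIT_1", "recv ACK"): "FIN_WAIT_2",
    ("FIN_WAIT_1", "recv FIN / send ACK"): "CLOSING",
    ("FIN_WAIT_1", "recv FIN+ACK / send ACK"): "TIME_WAIT",
    ("FIN_WAIT_2", "recv FIN / send ACK"): "TIME_WAIT",
    ("CLOSING", "recv ACK"): "TIME_WAIT",
    ("TIME_WAIT", "timeout"): "CLOSED",
    ("CLOSE_WAIT", "close / send FIN"): "LAST_ACK",
    ("LAST_ACK", "recv ACK"): "CLOSED",
}


def run(events: list[str], state: str = "CLOSED") -> str:
    """Apply events in order; an event not allowed in a state is an error."""
    for event in events:
        try:
            state = TRANSITIONS[(state, event)]
        except KeyError:
            raise ValueError(f"{event!r} not allowed in {state}") from None
    return state


# Active (client) side: open, then close first
print(run(["active open / send SYN", "recv SYN+ACK / send ACK",
           "close / send FIN", "recv ACK", "recv FIN / send ACK",
           "timeout"]))  # prints CLOSED
```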